This is the second of our 3 posts on improvements we’re making to service assessments. This post will focus on what we’re doing to make sure assessors and service teams have a consistent understanding of how to apply the updated Service Standard.

When we were writing the updated Standard last year, we did lots of research with people across government, so by the time we were preparing to launch it, we had a good idea of how it was going to be received. We knew that people generally welcomed the greater ambition and scope, and they understood the underlying intent, but there was some uncertainty around how to apply it to different situations.

Introducing assessor forums

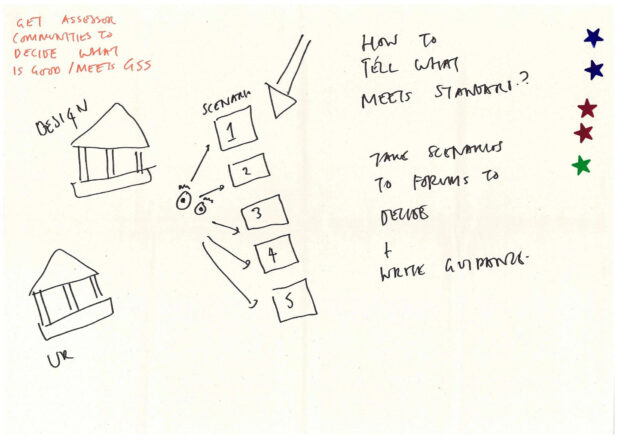

We decided to run a design sprint to surface our biggest risks with launching the Standard and decide how we’d address them. Our previous research showed that teams and assessors sometimes have differing interpretations of the Standard, so we were particularly interested in solving that.

During the design sprint, we developed the idea of assessor forums: a small group of assessors brought together to discuss the updates to the Standard. The idea is to give assessors an opportunity to explore the questions they might ask when assessing a service against the new points, so they can get familiar with the changes to the Standard.

But it goes further than that: we (GDS) would use forums as an opportunity to hear more about how the Standard would actually be applied in practice. This turns the problem we found in our research, inconsistent interpretations of the Standard, into an opportunity. It reframes the inconsistency as a wealth of experience to inform iterations to the Standard and guidance, making them more applicable to more scenarios.

Applying the standard through role play

Our early pilots used more hypothetical examples as prompts for discussion, but we ended up falling down too many rabbit holes of ‘it depends…’ with no actual discussion. So we decided that the main activity of these forums should be a role play to give the assessors an opportunity to apply the Standard to real scenarios. One assessor plays the role of ‘service owner’ and gives a presentation based on a service they’ve worked on, or read about in an assessment report. The assessors are then given the opportunity to think about what questions they would ask this service, particularly for points 2 and 3 at Alpha, and what responses they’d expect to hear. The ‘service owner’ then responds in the way they would in a real assessment (or close to it).

In a larger group, half of the room are ‘assessors’ and half of the room observe and comment later in a ‘wash-up’ discussion. People said they found this time for reflection on practice extremely useful. The purpose wasn’t to try and recreate an entire real assessment – this is difficult with one ‘service owner’ and 40 minutes – but to anchor reflections on the Standard points in a realistic scenario.

Assessors can still get involved!

To date, we’ve run these forums 9 times and iterated the format each time, to the point that we’re now confident it does help assessors deepen their understanding. We’re now encouraging departments to run these sessions themselves, and have developed some slides which you can use to do this. We’d love to hear what comes out of the conversations, especially if it could result in changes to the Service Manual or GDS support.

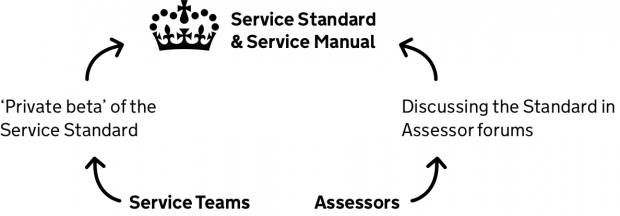

The long-term idea is to use these forums to bring assessors together more regularly to discuss what best practice looks like and gather insights on how we can improve the process and supporting guidance. This would create a feedback loop that mimics that of ‘users’ and ‘service team’, thinking of the assessors as one user group of the assessment service.

Our ‘Private Beta’ for the Standard

The other side of making sure that government is collectively ready for the updated Standard is, of course, service teams – the people actually building the services. GDS has a responsibility to ensure that teams in all departments are appropriately supported to be able to meet the Standard. So when we update it, that means checking in with teams to see how they’re doing.

Following the principles of running a good beta phase, we wanted to make sure we did this at a small scale first, to give us time to make any important changes that were needed before the first big wave of services came in for an assessment. In other words, we wanted to run a ‘private beta’ for the Service Standard. We did this by inviting service teams that were starting Discovery phases to opt in to being assessed against the updated Standard earlier than the mandated time. We had 6 teams opt in, and we followed their progress by attending their show & tells by hangout.

The idea was that if we noticed any issues in their show & tells, we would intervene by routing them to guidance in the Service Manual that we thought addressed their problem. If it did solve their problem, we’d dig into why they didn’t find that guidance themselves, as they would have needed to if they weren’t part of our Private Beta. If the guidance didn’t solve their problem, we would route them to the right support function (service design support, consulting technical architects, GDS platforms etc.), learn from that engagement and then amend the guidance accordingly. This creates a feedback loop, just as with the assessor forums, but this time between service teams and our support offering.

So far we have had one opt-in team come for a GDS alpha assessment: the Department for Education service was given a ‘met’ against the updated Standard, and the team has since written a blog post about their experience. We also have another alpha booked in for November.

What we’ve learnt

Neatly for us, similar findings came out of both these research approaches.

Understanding point 2 of the standard

The main thing is that people found it difficult to understand the distinction between point 2: ‘Solve a whole problem for users.’ and point 3: ‘Provide a joined-up experience across all channels.’

Both points are about the scope of work a team has done, but point 2 is more about making sure the service is designed with the user’s actual goal in mind, whilst point 3 is about thinking wider than just the digital bit. To better understand the confusion, we separated discussions about the points in the forums and found that people largely understood point 3, but misunderstood point 2 when the two were grouped together. People thought points 2 and 3 were about the same thing, with the ‘whole problem’ being interpreted as meaning ‘every channel’ rather than the users’ goal, which is what we intended.

Solving a whole problem – start small, think big

When people did get a handle on what we meant by ‘whole problem’, they still got hung up on the word ‘solve’ and the scale of the problem to be discussed in an assessment. This is understandable; if a department only has control over one part of the user’s problem, it would be far outside the scope of any single digital project to restructure government. Service teams are generally just tasked with getting their thing delivered. However, we should be working towards a government that is structured around services as users understand them, as we know that leads to better outcomes.

We see the Standard as a catalyst towards this long-term vision by encouraging people to think big but make pragmatic steps towards how things should ideally be. An excellent first step might be to set up a Service Community.

All this is an ambition that the words ‘Solve a whole problem for users’ might not fully convey, so we’re currently testing out some ideas for rephrasing and iterating the point itself.

We’re actively feeding the learning gained from our research on the assessments process into the Service Standard and Manual team: this has helped to inform the questions asked in assessments and will also help to iterate future guidance.

Shout out to the assessor community

Another thing we observed in the forums was just how valuable it is to get assessors into a room to talk about assessments! Assessors rarely get the chance to do this, particularly with assessors outside of their department. It’s been a great opportunity to build relationships and we’re excited about the positive effect this could have on the cross-gov assessor community.

Lastly, we want to say a big thank you to everyone who has taken the time to help us with our work to date. We appreciate your support and continue to use your feedback to develop guidance and help improve government services.

If you’d like to take part in future assessor forums, keep a lookout for invites from the assurance team over the next couple of months.