This is the third of our 3 posts on improvements we’re making to service assessments. The first was on operational improvements and the second was about helping people to understand the Service Standard. This post is about our venture into a new frontier for GDS: Life after the Live assessment.

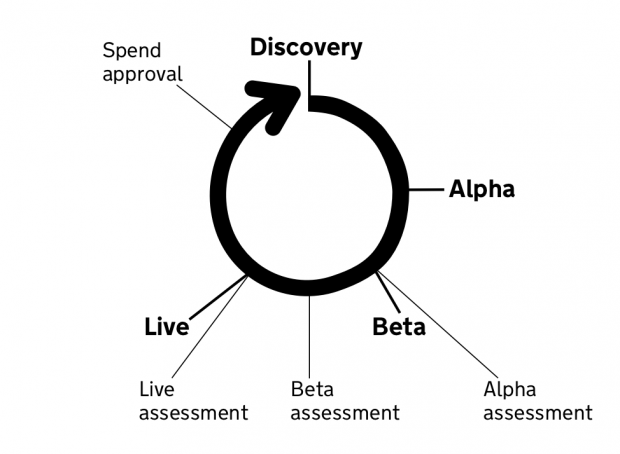

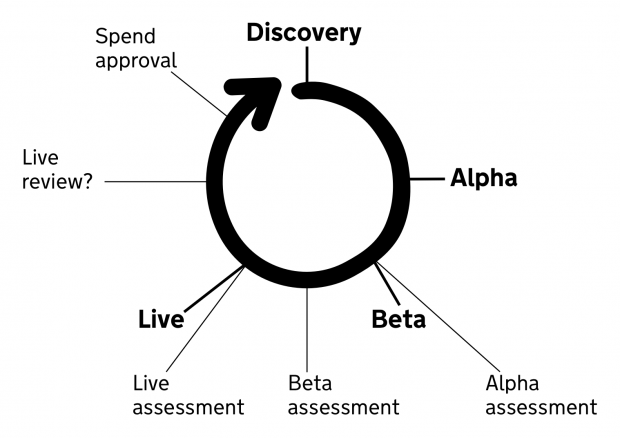

The GDS assurance process is designed to fit around the agile phases. We do assessments at the end of Alpha, the end of Private Beta, and the end of Public Beta going into Live. After that last assessment, we don’t currently have any planned formal engagement with service teams until the service puts in a new spend approval bid.

We’ve been wondering recently if this works. The Live phase is when users really rely on a service, and when legacy services are decommissioned, so some problems are only found at that point. In addition, conditions external to the service change over time, which sometimes means a service that once met all users’ needs and expectations is no longer fit for purpose.

The Service Manual says that “the Live phase is about supporting the service in a sustainable way and continuing to iterate and make improvements,” but GDS has no formal process for ensuring this happens. Traditional service management too often focuses on maintenance and support functions, and fails to provide the level of continuous improvement expected in modern government services.

Understanding the problem

The Service Standard expects the Live phase of a service to feature ongoing continuous improvement – building on the work in Public Beta, and continuing to learn about and improve the service, to prevent it becoming new legacy.

However, we’d heard anecdotally that this isn’t always done consistently. So we decided to talk to some teams and find out whether this was actually an issue. We interviewed 4 teams and combined what we learnt from talking to them with our own understanding and experience of this space.

We used a knowledge kanban to track our learnings as the research progressed, then got to a stage where we felt we could reflect back some findings:

- some services will have been handed over to a centralised team that is responsible for running and maintaining them

- we’ve seen that these live service teams are very different shapes and sizes, from a team of around 30 people that looks after 35 services, to a ‘team’ of around 800 people that looks after one service. This is because services are all very different!

- the problems these teams are solving are all very different too. Some are operational/scale fixes, some are improvements to keep up with changes in best practice (for example, design patterns), and sometimes teams need to completely rethink whether their service should exist in response to a change in external circumstances

- these variances could indicate an opportunity to share best practice across Live service teams – something assurance can help with.

We also reflected back from our knowledge of pre-Live assessments that:

- Alpha service assessments often don’t refer very much to the existing live service that is intended to be replaced

- new services are often integrated with pre-existing legacy platforms or technology. Decommissioning these legacy systems will achieve savings, but is often seen as a complex or intractable problem

- Live assessments are incredibly rare. Most services assessed by GDS have not moved beyond Public Beta. We think this is partly because teams feel that if they move into Live they are more likely to lose people or money, as the service is moved into “business as usual”

- there are also many services that, for a variety of reasons, have not been assessed by GDS at all. One common reason for this is that they were built before GDS existed and a replacement hasn’t been prioritised

- some teams see assessments as little more than a blocker to launching their service. Assurance really should be about making sure the right thing is built the right way rather than slowing teams down, but sometimes the experience can go against this ideal. Sometimes even assessors contribute to this problem by prioritising stopping bad things in their tracks instead of giving useful feedback to help teams deliver the right thing.

The answer? Live service reviews

Getting more of a sense of this problem space led us to a hypothesis: GDS could address some of this by facilitating assessment-style reviews on Live services.

The idea is to have a peer review before risks become real issues, and to support teams to scope spend requests that result in good Discovery projects. As with other assessments, the report at the end of the Live review would give the team external feedback on its progress to date. This would include praise for good elements of the service, suggestions for improvements, and broader feedback on ways of working that the team could use as leverage to address barriers it has faced.

We’re blogging about this early, as it’s still an untested idea. Our intention is to test this approach with some pilots to establish the best, most useful way of supporting teams in this phase. But we have some thoughts about how the format will work:

- we think these Live service reviews could look like Live service assessments: key members of the team sit down for half a day with a multi-disciplinary panel of assessors gathered from other government departments

- the pilots need to explore different options for reviewing operational issues for the service. This might be via a dedicated section of the assessment agenda, or by having a new type of assessor: an Operations assessor experienced in the effective and efficient running of Live government services

- potential areas to explore would be all of the things listed in the Live phase guidance in the Service Manual. In addition, there’d be a renewed focus on proving the service is continually improving outcomes for its users, and meeting its policy objectives. It’s important that Live services are able to demonstrate not only that they’ve met user needs (e.g. users are able to access training), but also that the service has produced the intended outcomes for their users (training has helped the user address the problem identified in the policy intent). This is particularly where point 2 of the updated Standard plays its part

The hypothesis is that this would solve the problems we’ve identified with Live services stagnating until they fall over, whilst also enabling services to complete Beta more quickly, as assessors would see that the team will continue to be prompted to fix any issues with the service after it goes live.

But what about Live Service Assessments?

This still leaves the issue of services that aren’t moving into Live at all because they stay in Public Beta.

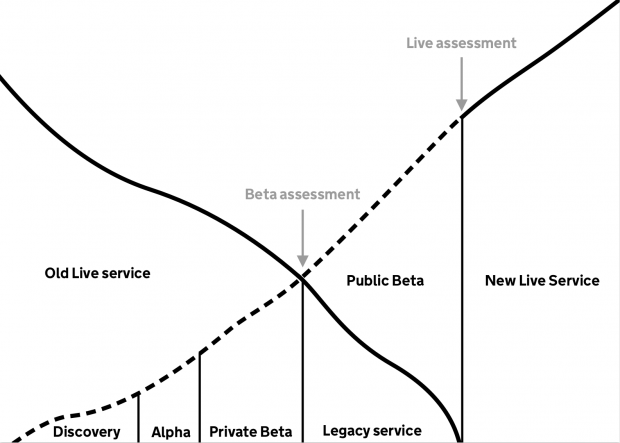

Part of the issue here is people misunderstanding what Public Beta is. Really, if an old service is replaced by a new one, the new service becomes the ‘Live’ service as soon as the old service is turned off. This should happen at the same time as a Live assessment.

In reality, we know that this isn’t happening. Departments are often switching their legacy services off whilst the new service is still in Public Beta, meaning the de facto new Live service has never passed (or often, even attended) a Live assessment.

This is difficult for GDS to influence because running both a legacy service and a new live service means twice the expenditure. Of course we don’t want to tell departments to spend more money unnecessarily, as we’re here to help departments to save money by building better services. It really is a department’s choice if they feel confident enough in their new service to turn off their legacy service, but we do think that running a Live service assessment is the best way to get that confidence from external peers.

What we can do as GDS is make sure departments don’t have to wait very long for a service team to attend a Live assessment. We’re doing this by making it a recommendation included in all Beta assessment reports that the service team books in their Live assessment immediately on receipt of their Beta report. The panel will decide the recommended time frame between the Beta assessment and Live assessment, but the default in the report template is 6–12 months.

This means that all services that pass their Public Beta should be sitting a Live assessment within a year, unless they have a good reason not to. The immediate impact of this is that we can be more confident in switching off our legacy services, but we think there’ll be a side benefit of more services passing their Public Beta assessment too, because assessors will be more confident that the team will receive more feedback soon afterwards. This idea is then extended into the Live service reviews.

It’s still early days, but our early thinking makes us feel confident that these changes to the assessment process will help teams go Live more quickly with better quality services, and ultimately facilitate the progressive spiral of delivery – continuously improved outcomes for people.

We’ve got a few departments interested in trialling this process to complement the existing spend controls conversation they’re having with GDS. If you’d like to be involved in this as well, you can contact the GDS assessments team at dbd-assessments@digital.cabinet-office.gov.uk.

From 3–7 February, Services Week 2020 gets people from all parts of government to discuss how we can work together to deliver end-to-end, user-focused services. Follow #ServicesWeek and join sessions in person or remotely.

If you work in the public sector, take a look at the open agenda and find a session in your area that interests you.