We often fail to test the things we’re uncertain about and instead spend a disproportionate amount of time on the things that are givens. This leads to long explorations, without conclusive evidence to make good, timely investments. We can avoid this by prioritising our assumptions by their risk, then testing them. If you are working in a big problem space, doing this will focus your service team on learning the things with the most value.

In this blog post, I’m sharing how you can use this method with your teams. Plus, you’ll get to make your prioritisation more like being a judge on a reality TV show, Strictly Come Dancing-style. This is way more fun than any effort-and-impact priority matrix.

This is the first of two blog posts. The second blog post will be about the value of testing riskiest assumptions and will be published soon. It will discuss the benefits of using the method, with some examples of when it’s been valuable.

Why it’s important

Exploration can drag on when we don’t spend enough time learning about the uncertain, especially in a big problem space. We might make progress on solving some problems, yet we’re still no closer to a usable service we can operate, or having an impact.

When starting the discovery phase for a service, we often don’t know where to begin our research. It’s great when people have different hunches. We see this particularly in teams with many disciplines. Yet it can be hard to know what to focus on when trying to solve a whole problem for users.

When a discovery phase throws up lots of problems, we don’t know where to start our alpha phase. We know we need to design a few user journeys from start to finish. We could say start at the beginning or start anywhere, as it all needs designing.

Many alpha phases take much longer than the suggested 6 to 8 weeks. We still don’t feel confident about what our service should look like in the beta phase. We’re left in perpetual exploration, like me digging for records in a shop basement, but less fun. This can happen when we mistakenly allocate all of our time according to what is most valuable for the user. Value for users is incredibly important, but there’s a nuance to it.

We should prioritise spending time on things we most need to learn about. The things we most need to learn about might be different from what has the most value for users. We don’t always make this distinction, and in the end, value for users suffers.

How to prioritise assumptions by risk

It’s ok to have hunches. Everyone has them. Hunches are only dangerous when we don’t test them.

We form hunches about how to solve problems from a range of assumptions. Sometimes, our assumptions are informed by spotting patterns in our experiences. Other times, we overreach and that’s when, to quote the old saying, “To assume is to make an ASS out of U and ME”.

Prioritising assumptions by risk helps us focus on where we might be overreaching and prevents never-ending exploration. The first step is called assumption surfacing. Testing the assumptions later is called riskiest assumption testing (RAT).

How to do it:

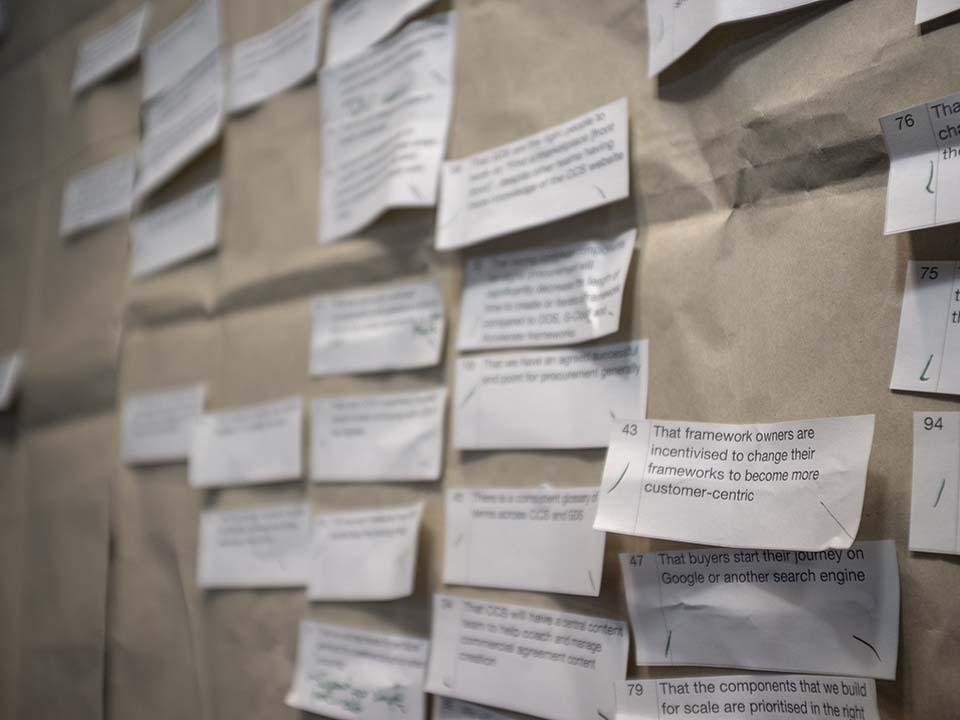

- Everyone writes down their own assumptions as well as assumptions they’ve heard. Cover the service, product, users, business case, market opportunity, technology, delivery approach and stakeholders.

- Everyone writes down what the consequences of their assumptions being wrong would be.

- Everyone writes down what existing knowledge they have to inform their assumptions. This could be things that suggest it to be right or wrong.

- Get everyone’s assumptions in one place and combine any duplicates.

- Privately, each person gives each assumption two different scores out of 10. The first is “impact if we get this assumption wrong”, the second is “confidence I know this is right or wrong”.

- Meet as a team. Read out each assumption. Channel your favourite Strictly Come Dancing judge and reveal your individual scores. Agree consensus scores for impact and confidence. Top tips: time box discussion about each assumption to a minute and gather all the existing knowledge in one place.

- Give each assumption a risk score. Risk score = impact × (10 - confidence). 100 is the riskiest assumption, 0 is the safest assumption. Inverting confidence means assumptions we lack confidence in produce higher risk scores.

- Sort the assumptions by their risk score.

I’ve given a simple example below, with a throwback to a 2004 design icon – the Motorola Razr mobile phone. The overwhelming sense of nostalgia should help the method feel less intimidating. Imagine our team are trying to figure out how to make the next smash hit smartphone…

| Assumption | Consequence if wrong | Existing knowledge to mitigate risk | Impact if wrong | Confidence we know the answer | Risk score |
| --- | --- | --- | --- | --- | --- |
| People would buy a folding smartphone | We lose money on our investment | The 2004 Motorola Razr was popular | 8 | 4 | 48 |
| We can produce folding glass screens | We don’t have a unique selling point | We have low-resolution prototypes | 7 | 2 | 56 |

If you’re reading the example, thinking, “no way is that a 4!”, perfect. You know something that I don’t. You can imagine our team discussing how popular the Motorola Razr was. We’d debate how similar our folding smartphone is.
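If your team keeps its assumptions in a spreadsheet or script rather than on sticky notes, the scoring and sorting steps can be sketched in a few lines of Python. This is only an illustration of the formula from the method, using the two example assumptions. The `Assumption` class and `prioritise` function are names I’ve made up for this sketch, and I’m assuming consensus scores are whole numbers from 0 to 10.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    """One row of the assumptions list (hypothetical structure for this sketch)."""

    text: str
    impact: int      # consensus "impact if wrong" score, assumed 0 to 10
    confidence: int  # consensus "confidence we know the answer" score, assumed 0 to 10

    @property
    def risk(self) -> int:
        # Risk score = impact × (10 - confidence).
        # Inverting confidence means low confidence produces a higher risk score.
        return self.impact * (10 - self.confidence)


def prioritise(assumptions):
    """Return the assumptions sorted from riskiest to safest."""
    return sorted(assumptions, key=lambda a: a.risk, reverse=True)


assumptions = [
    Assumption("People would buy a folding smartphone", impact=8, confidence=4),
    Assumption("We can produce folding glass screens", impact=7, confidence=2),
]

# Print the prioritised list, riskiest first.
for a in prioritise(assumptions):
    print(f"{a.risk:>3}  {a.text}")
```

With the example scores, the folding glass screens assumption (56) sorts above the buying assumption (48), matching the risk scores in the table.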

Our team now has a list of assumptions, prioritised by risk. Now we can agree as a team what we need to quickly learn, experiment and test to help mitigate those risks. This will help form a good backlog for a discovery or alpha. This method can also help in other situations when you're working in big problem spaces with lots of uncertainty, and don’t know where to start.

What to remember

- When you’re in a big problem space, prioritise your team’s learning according to your riskiest assumptions.

- Use this method to avoid spending too long on discoveries and alphas.

- Anyone can get started, but do it as a team to learn from each other for a more informed prioritisation.

- Ask your team “what is the quickest way to test our riskiest assumptions?”

Further reading

- “The MVP is dead. Long live the RAT” by Rik Higham was my first introduction to using the method

- Assumption Surfacing by Mycoted is a good way to look at the risk of decisions already made

Learning about weighting scores

When we discussed the method, Georgina Watts was curious about weighting the impact and confidence scores. For example, we could weight impact higher than confidence. This might be useful with a group of people who tend to be overly confident, or who don’t talk about failure.

I’ve only used a 50/50 weighting. I'd be curious to hear from others that have experimented with other weightings. Please leave a comment if you want to share your remix.
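If you want to experiment, here is one possible way to weight the scores, sketched in Python. It is an assumption of mine, not something I’ve tried with a team: the `weighted_risk` function and its `impact_weight` parameter are hypothetical, and using exponents is just one of several ways a weighting could work. I chose it because a weight of 0.5 reproduces the original risk score exactly.

```python
def weighted_risk(impact: float, confidence: float, impact_weight: float = 0.5) -> float:
    """Hypothetical weighted risk score (an assumption, not part of the method).

    impact_weight is between 0 and 1. At 0.5 the result equals
    impact × (10 - confidence). Above 0.5, impact counts for more
    than lack of confidence; below 0.5, it counts for less.
    """
    if not 0 <= impact_weight <= 1:
        raise ValueError("impact_weight must be between 0 and 1")
    uncertainty = 10 - confidence  # inverted confidence, as in the risk score
    # The exponents always sum to 2, so the 50/50 case is a plain product.
    return impact ** (2 * impact_weight) * uncertainty ** (2 * (1 - impact_weight))


# 50/50 weighting gives the same scores as the unweighted method.
print(weighted_risk(8, 4))
print(weighted_risk(7, 2))

# Weighting impact more heavily, for example for an overly confident team.
print(weighted_risk(8, 4, impact_weight=0.75))
print(weighted_risk(7, 2, impact_weight=0.75))
```

In this sketch, weighting impact at 0.75 is enough to move the “people would buy a folding smartphone” assumption above the folding glass screens one, because its impact score is higher. Whether that reordering is helpful is exactly the kind of thing worth discussing as a team.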

4 comments

Comment by Martha posted on

Thank you for sharing exactly how you did this - super useful. I am going to try this with my team!

Comment by Harry Vos posted on

Thank you Martha 😃 I’m glad you found it useful. Hope it goes well. Let me know how you get on

Comment by Alex (Softwire) posted on

Thanks for sharing. We've been doing this for a while, but without explicitly drawing out the evidence aspect. It's a nice build on the idea

One thing we do is use a quadrant model. We find it easier to use positive and negative statements (“I feel confident”, “I feel unconfident about that”), and it replaces scores with visual location.

You can then deal with things as the four quadrants (priority to investigate, check you're correct, treat as axioms, defer till later), clockwise from top left, with impact as the vertical axis and certainty as the horizontal.

Comment by Harry Vos posted on

Thanks Alex! 🙌🏻 I love a good 2x2 and I especially like the way you’re categorising each quadrant